From my tweets or from searching the Internet you might have learned that UAG 2010 SP3 will detect Windows 8.1 clients as mobile devices.

The unsupported solution presented by Risual does not mention that you need to make the change in both the InternalSite web.config and the PortalHomePage web.config. This is documented in the official TechNet article on how to modify the DetectionExpression.

However, they both fail to tell you that the modifications you make in the web.config files might cause your UAG 2010 to start showing IIS Error 500.

The reason is obvious if you're a programmer, but maybe not if you're a typical IT pro.

When following the guide from Risual, for example, and adding the line

<DetectionExpression Name="IE11" Expression='UserAgent Contains "mozilla" AND UserAgent Contains "rv:11"' DefaultValue="false" />

you have to remember to add that line BEFORE you refer to it in a line like

<DetectionExpression Name="Mobile" Expression='!IE11 AND (MobileDevice OR WindowsCE)' DefaultValue="false" />

Otherwise the Mobile expression refers to a name that has not been defined yet.

Author: Kent

Is it possible in FIM 2010 R2 to…?

I got a few questions today about FIM 2010 R2 and thought I should share the answers with you all.

The questions were:

I just want to know if the followings are possible technically and I need a way how to proceed.

- Is it possible to update or delete Managed Accounts totally, using an interface?

- Is it possible to make integration with Active Directory SAP 6.0? any tool or utility?

- Is it possible to manage the ACLs at File Server? For example can file owner manage ACL and membership on FIM Portal?

The short and quick answer to all questions is… Yes! Because basically you can do anything with FIM 2010 R2 🙂

I will give the longer answer for each question in turn.

Is it possible to update or delete Managed Accounts totally, using an interface?

Any account in FIM 2010 R2 (managed or not) can be updated and deleted using the FIM 2010 R2 portal. If by “managed accounts” you mean linked accounts, like an administrative account, the same applies. I also have solutions at customers where, for example, the linked account is automatically updated based on events on the main account.

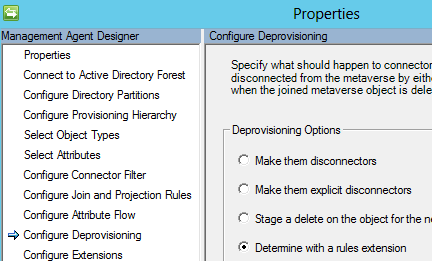

When it comes to deleting, I strongly recommend reading the article on deprovisioning written by Carol Wapshere. My personal recommendation, if you plan on implementing deletes in for example AD, is that you use a rules extension so that you can restrict the deletions to certain objects only.

Is it possible to make integration with Active Directory SAP 6.0? any tool or utility?

I am not that familiar with different versions of SAP. But if we look at the Management Agents available for FIM 2010 R2 I would think that the Connector for Web Services would do the trick. If it doesn’t I am pretty sure a generic adapter like PowerShell can be used to solve the integration with SAP 6.0.

Is it possible to manage the ACLs at File Server? For example can file owner manage ACL and membership on FIM Portal?

To manage folder objects (I do hope you're not working with file permissions 😉 ) I would use a PowerShell connector. Microsoft has its own on Connect (Release Candidate at the moment) or you can use the great PowerShell MA developed by Søren Granfeldt. Using PowerShell it’s quite easy to work with the security descriptor on your folders. If you look at my example regarding the HomeFolder MA you might get an idea of how to do it. In FIM we need to extend the schema to hold the objects. To begin with I would have three multi-value reference attributes (Read, Modify, FullControl) to assign the permissions. You would also need to assign an owner (or Manager) attribute in order to use the portal for self-service and have an MPR like “Folder Owners can manage permissions on folders they own”. In reality I would also make sure I get some new forms by adding some RCDCs.
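As a rough illustration of what working with the security descriptor can look like, here is a minimal sketch using the built-in Get-Acl and Set-Acl cmdlets. The function name Grant-FolderPermission and its parameters are made up for this example; in a real MA the identity and the right would come from the Read, Modify and FullControl attributes, and you would probably also want to remove rules that are no longer wanted.

```powershell
# Hypothetical helper, not part of any MA: grants one identity a right on a folder.
function Grant-FolderPermission {
    param(
        [Parameter(Mandatory=$true)][string]$Path,
        [Parameter(Mandatory=$true)][string]$Identity,
        [ValidateSet('Read','Modify','FullControl')][string]$Right = 'Read'
    )

    # Read the current security descriptor of the folder.
    $acl = Get-Acl -Path $Path

    # Build an Allow rule that is inherited by subfolders and files.
    $rule = New-Object -TypeName System.Security.AccessControl.FileSystemAccessRule -ArgumentList $Identity, $Right, 'ContainerInherit,ObjectInherit', 'None', 'Allow'

    # Add the rule and write the descriptor back to the folder.
    $acl.AddAccessRule($rule)
    Set-Acl -Path $Path -AclObject $acl
}
```

Called as Grant-FolderPermission -Path $folder -Identity $account -Right Modify, this adds one explicit rule and leaves the existing rules on the folder untouched.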

As you can see… We can do a lot of things in FIM! If you have follow-up questions please comment.

FIM when COTS and CBC matters!

I have been working with many FIM projects over the last 4 years. One common question in all projects is… Why FIM? A common answer is COTS and CBC.

All customers I have worked with have had some kind of IdM solution in place. Commonly it’s a self-made solution, and the customers have finally started to realize the true cost of maintaining this homegrown solution. They realize they want a COTS, Commercial Off-The-Shelf, product.

Another common request is to introduce self-service. Not only things like SSPR, Self-Service Password Reset, but also to delegate tasks like creating users and managing them. Using the built-in FIM Portal to allow this has actually proven quite useful. Many are complaining about the lack of functionality in the RCDC (FIM portal forms), but customers realizing the cost of homegrown solutions are accepting these shortcomings in order to go for CBC, Configure Before Code (or Configure Before Customize).

So if you ever wondered… Why FIM? I think a simple answer is because you want COTS and CBC!

Using PowerShell MA to replace ECMA 1.0 used for ODBC

One of my customers has a number of old ECMA 1.0 Management Agents that use ODBC (the NotesSQL driver in this case) to talk to IBM Notes. But since ECMA 1.0 is now being deprecated it was time to look at alternatives. One option was to try and upgrade the old MA to ECMA 2.0. I did however give the PowerShell MA from Søren Granfeldt a try first. What I actually discovered was that doing so was much easier and more cost-effective than writing new ECMA 2.0 based MAs.

What I also discovered was that I ended up with something that could be called a “generic” ODBC MA. With only minor changes to the scripts I was able to use it for all IBM Notes adapters, including managing the Notes Address Book.

If you would like to give it a try, the setting I used in the PS MA was to export the “old” CSEntryChange object. Since NotesSQL is only available as a 32-bit driver, I was also required to configure the MA to use the x86 architecture. You can download my sample scripts here. One nice thing I am doing in this example is reading the schema.ps1 file to get all the “columns” to manage in the script. With that, all you need to do to add support for reading and updating a new column in the ODBC source is to add it to the schema.ps1 file.

HomeFolder script for PowerShell MA

A short while ago Søren Granfeldt released a new version of his fantastic PowerShell MA. One of the nice things is that it now supports sending error messages back to the MA. I implemented it this week, for Home Folder management, at a customer and this resulted in a new example script I wanted to share with you all.

The new example script can be downloaded here: PSMA.4.5.HomeFolder.Example. This sample script is based on using the “old” CSEntryChange object, rather then configuring it to use the new feature in this MA allowing you to Export simple objects, that will use PSCustomObject instead.

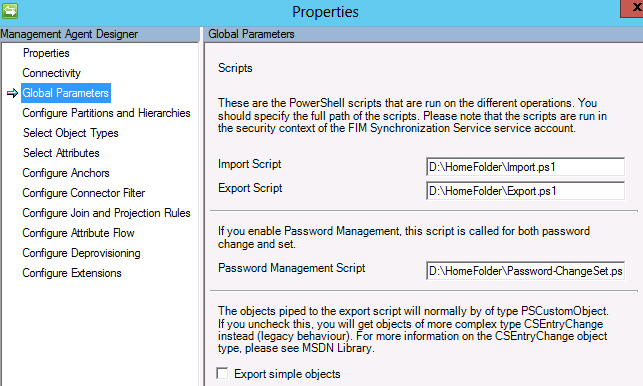

The Global Parameters for the MA this script is used in, is set as in the picture below.

Microsoft Forefront UAG Mobile Configuration Starter

Are you planning to allow mobile devices in your company and realize you need a secure way of publishing the resources that the clients will access?

Well then I suggest you take a look at the Microsoft Forefront UAG Mobile Configuration Starter book written by Fabrizio Volpe. In this book Fabrizio gives you an easy-to-follow guide to get you started with using UAG as your mobile access solution. He also gives you many pointers to resources where you can dig deeper into the mystery of allowing mobile devices access to your internal resources.

I also recommend you take a look at my earlier post on how to use KCD to secure your infrastructure, where I discuss how KCD can be used to secure mobile device access.

Using SharePoint Foundation 2013 with FIM

With the new SP1 released for FIM 2010 R2 it is now supported to use SharePoint Foundation 2013 on the FIM Portal server. Installing and configuring SPF 2013 to work with FIM is however not that straightforward. In this post I will tell you how to do it and also give you some handy scripts and files you might need.

The scenario is that I am installing FIM 2010 R2 SP1 on Windows Server 2012. This means I will use SharePoint Foundation 2013 for my FIM Portal. I also use the same SQL Server as I use for my FIM Service but use a separate SQL alias for SPF in order to be able to easily move it if required.

All files discussed in this post can be downloaded from my Skydrive (approx. 52 MB). Make sure you read the ReadMeFirst.txt file before you use anything. The configuration script is attached directly in this post if that is all you need.

Prerequisites for SharePoint Foundation 2013

If you just try to install SPF 2013 on your Windows Server 2012 you will likely fail, since the prerequisites installation will probably not succeed. One reason is that .NET Framework 3.5 cannot be added as a feature by the installer, since the source files are no longer available for all features in Server 2012.

To install the Roles and Features required you can use the following PS commands.

Import-Module ServerManager

Add-WindowsFeature Net-Framework-Features,Web-Server,Web-WebServer,Web-Common-Http,Web-Static-Content,Web-Default-Doc,Web-Dir-Browsing,Web-Http-Errors,Web-App-Dev,Web-Asp-Net,Web-Net-Ext,Web-ISAPI-Ext,Web-ISAPI-Filter,Web-Health,Web-Http-Logging,Web-Log-Libraries,Web-Request-Monitor,Web-Http-Tracing,Web-Security,Web-Basic-Auth,Web-Windows-Auth,Web-Filtering,Web-Digest-Auth,Web-Performance,Web-Stat-Compression,Web-Dyn-Compression,Web-Mgmt-Tools,Web-Mgmt-Console,Web-Mgmt-Compat,Web-Metabase,Application-Server,AS-Web-Support,AS-TCP-Port-Sharing,AS-WAS-Support,AS-HTTP-Activation,AS-TCP-Activation,AS-Named-Pipes,AS-Net-Framework,WAS,WAS-Process-Model,WAS-NET-Environment,WAS-Config-APIs,Web-Lgcy-Scripting,Windows-Identity-Foundation,Server-Media-Foundation,Xps-Viewer -Source R:\sources\sxs

As you can see that is quite a one-liner, and the key part for success is the -Source parameter at the end, which needs to point to a valid Server 2012 sources folder, like the DVD. The source path can also be managed by setting a group policy, but if you would like to do that I ask you to search the web and you will find it.

At one customer I also ran into the problem that the FIM server did not have Internet access, so I was forced to download the prerequisites for SPF 2013 and run the prerequisites installer manually. One thing to note here is that you cannot install the prerequisites by hand; you have to let the SharePoint prerequisites installer do the job. In the download on my Skydrive you will find the required files as well as a cmd file to run the installer. To use this you need to extract the SharePoint.exe file into a folder so that you can run prerequisiteinstaller.exe from the command line.

Installing SharePoint Foundation 2013

I did not automate the installation since there is only one choice to make and one thing to remember.

I select a Full installation during setup. This adds all the bits and pieces required by SPF but does not configure anything. NOTE! At the end of the installation you need to remember to UNCHECK the “Run the SharePoint Products Configuration Wizard now” option. We will do this manually.

Configuring SharePoint Foundation 2013 for FIM

If you look at the official TechNet guideline for using SharePoint Foundation 2013 with FIM, you will find that it only points out a few things to remember. But since I also want to do the whole configuration for SPF, I have created a PowerShell script that does all the things mentioned on TechNet but also all the other things required to use SPF. In order to use it you will need to walk through it and change the passphrase, account names and website names to fit your needs. The script will take a few minutes to run so don’t be alarmed if nothing happens for a while. Once the script is finished your environment is ready for you to install the FIM Portal.

Replacing OpenLDAP MA with PS MA

By replacing the OpenLDAP XMA with Søren Granfeldt’s PowerShell MA I gained a 20-30% performance improvement, got delta import support, and at the same time reduced the amount of managed code by hundreds of lines.

One of my customers is using OpenDJ as a central LDAP directory for information about users and roles. In order to import this information into FIM we have been using the OpenLDAP XMA from codeplex. But since this MA is built using the deprecated ECMA 1.0 framework and also has some issues that forced me to rewrite it into a customer-specific solution, I decided to move away from it and start using a PowerShell MA instead.

I will try to point out the most critical parts of the PowerShell I used if you would like to try this yourself.

But you can also download a sample script to look at if you like.

This PS MA sends three parameters as input to the PowerShell script

param ($Username,

$Password,

$OperationType)

but also holds a RunStepCustomData parameter that can be useful when doing delta imports. If you look at the example downloads listed at this PS MA homepage you will see some examples using this RunStepCustomData parameter. In this particular case I chose to store the delta cookie in a file instead, allowing the FIM administrator some flexibility to manually change the cookie value if needed.

Let’s move on to the script then.

I use the System.DirectoryServices.Protocols to do the LDAP queries so I need to start by adding a reference to that assembly.

Add-Type -AssemblyName System.DirectoryServices.Protocols

I need to define the credentials I will use and the LDAP Server to connect to.

$Credentials = New-Object System.Net.NetworkCredential($username,$password)

$LDAPServer = "ldap.company.com"

With that we can now create the LDAP connection to the OpenDJ server.

$LDAPConnection = New-Object System.DirectoryServices.Protocols.LDAPConnection($LDAPServer,$Credentials,"Basic")

Now it’s all about defining the search filters and searching the LDAP for the objects we are interested in. It could look something like this

$BaseOU = "ou=People,dc=company,dc=com"

$Filter = "(&(objectClass=EduPerson)(!(EduUsedIdentity=*)))"

$TimeOut = New-Object System.TimeSpan(1,0,0)

$Request = New-Object System.DirectoryServices.Protocols.SearchRequest($BaseOU, $Filter, "Subtree", $null)

$Response = $LDAPConnection.SendRequest($Request,$TimeOut)

Within the $Response object we now have the result from our search and can iterate through it and set the values to the object returned to the Management Agent.

ForEach($entry in $Response.Entries) {

$obj = @{}

$obj.Add("OpenDJDN", $entry.DistinguishedName)

$obj.Add("objectClass", "eduPerson")

If($entry.Attributes["cn"]){$obj.Add("cn",$entry.Attributes["cn"][0])}

If($entry.Attributes["uid"]){$obj.Add("uid",$entry.Attributes["uid"][0])}

$obj}

You now have a working import from OpenDJ. But we have not added the delta support just yet. Once you have verified that your full import is working you can start to extend your script to also support delta imports from OpenDJ.

OpenDJ works with a changelog that increases the changenumber for each entry. In order to read the changelog from the correct changenumber we need to store the last known changenumber. In my example I store this in what I call the cookie file.

$CookieFile = "D:\PS-Scripts\OpenDJ\Cookie.bin"

In this file I store the integer value of the last changenumber we have worked with.

To read the value I use the following line.

$changeNumber = [int](Get-Content -Path $CookieFile)
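Reading the cookie this way assumes that the file already exists and holds a number. A slightly more defensive variant could look like the sketch below. The function name Get-ChangeNumberCookie and the default value of 0 are my own assumptions for illustration and are not taken from the sample script.

```powershell
# Hypothetical helper: returns the stored changenumber, or a default when
# the cookie file is missing or does not contain a number.
function Get-ChangeNumberCookie {
    param(
        [Parameter(Mandatory=$true)][string]$Path,
        [int]$Default = 0
    )

    # First run: no cookie file has been written yet.
    if (-not (Test-Path -LiteralPath $Path)) { return $Default }

    # Only the first line matters; an empty file gives $null here.
    $raw = Get-Content -LiteralPath $Path -TotalCount 1

    # TryParse avoids an exception if someone edited the file by hand.
    $number = 0
    if ([int]::TryParse([string]$raw, [ref]$number)) { return $number }
    return $Default
}
```

Since the whole point of the file is that an administrator can edit it manually, falling back to a default instead of failing keeps a bad edit from stopping the import run.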

We can then search the changelog for entries from the stored changeNumber and up. LDAP filters only offer >= and no strict "greater than", so the entry we stored last time is returned again.

It would look something like this.

$ChangeFilter = "(&(targetdn=*$($BaseOU))(!(cn=changelog))(changeNumber>=$($changeNumber)))"

$ChangeTimeOut = New-Object System.TimeSpan(0,10,0)

$ChangeRequest = New-Object System.DirectoryServices.Protocols.SearchRequest("cn=changelog", $ChangeFilter, "Subtree", $null)

$ChangeResponse = $LDAPConnection.SendRequest($ChangeRequest,$ChangeTimeOut)

We now run into two problems that need to be resolved.

First: The changelog might give me the same object twice if multiple changes have been made to the object.

Second: The changelog object does not contain all the attributes I need to return to the MA.

The first problem is solved by filtering the response from the search and collecting only unique objects. Note that Get-Unique only removes duplicates that sit next to each other, so the values have to be sorted first.

In my case I used the following line to do that.

$UniqueDN = $ChangeResponse.Entries | ForEach{$_.attributes["targetdn"][0]} | Sort-Object -Unique
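A detail worth knowing when collecting unique values from a pipeline is that Get-Unique only compares each item with the one right before it, while Sort-Object -Unique removes duplicates wherever they occur. The small sketch below shows the difference. The DNs are made-up sample data standing in for the targetdn values from the changelog.

```powershell
# Made-up sample data: the same DN appears twice, but not next to each other.
$targetDns = @(
    'uid=anna,ou=People,dc=company,dc=com',
    'uid=bert,ou=People,dc=company,dc=com',
    'uid=anna,ou=People,dc=company,dc=com'
)

# Get-Unique only compares neighbouring items, so the repeated DN survives.
$adjacentOnly = @($targetDns | Get-Unique)

# Sorting first removes duplicates regardless of their position.
$uniqueDns = @($targetDns | Sort-Object -Unique)

"Get-Unique kept $($adjacentOnly.Count) of $($targetDns.Count) values"
"Sort-Object -Unique kept $($uniqueDns.Count) of $($targetDns.Count) values"
```

Sort-Object -Unique also compares strings case-insensitively by default, which suits distinguished names.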

We then need to iterate through these unique DNs to get the actual objects and get the attributes we need.

ForEach($DN in $UniqueDN){

$GetUserReq = New-Object System.DirectoryServices.Protocols.SearchRequest($DN, "(objectClass=EduPerson)", "Base", $null)

$GetUser = $LDAPConnection.SendRequest($GetUserReq)

If($GetUser.Entries.Count -eq 0){continue}

$entry = $GetUser.Entries[0]

If($entry.Attributes["EduUsedIdentity"]){continue}

$obj = @{}

$obj.Add("OpenDJDN", $entry.DistinguishedName)

$obj.Add("objectClass", "eduPerson")

If($entry.Attributes["cn"]){$obj.Add("cn",$entry.Attributes["cn"][0])}

If($entry.Attributes["uid"]){$obj.Add("uid",$entry.Attributes["uid"][0])}

$obj}

One thing we also need to remember is to save the last changenumber back to our cookie file for the next run. If the changelog search returned nothing, there is nothing to save.

If($ChangeResponse.Entries.Count -gt 0){

$LastChangeNumber = [int]$ChangeResponse.Entries[($ChangeResponse.Entries.Count -1)].Attributes["changeNumber"][0]

Set-Content -Value $LastChangeNumber -Path $CookieFile}

You also need to remember to do that during your full import runs. So within the script where you process full imports you need to add a search to get the current latest entry in the changelog.

I have attached a demoscript for you to download to get you started in your own exploration of using PowerShell to work with OpenDJ. If you look at it you will likely find that it can be optimized in some ways. But I kept it this way to make it easy for non PowerShell geeks to be able to read it and understand it.

One thing you might have noticed is that I have not added support for detecting deletes. This is just a matter of adding some logic to read the changetype in the changelog, but I have not yet had the time to do this. At this customer, deletes will be detected every night when the full import is running anyhow.

ForEach vs ForEach-Object in PowerShell

In a current project where I use the PowerShell Management Agent from Søren Granfeldt to import information from a large LDAP catalog I discovered that there are some performance problems if you use PowerShell the incorrect way. One of these things is the use of ForEach vs ForEach-Object when enumerating a large collection of objects.

Searching the web I found this article from Anita, that helped me.

The result was stunning!

Look at this scenario where I search for objects in the LDAP catalog and the search returns more than 20 thousand objects.

$Response.Entries.Count returned 21897.

I then use the Measure-Command to compare the ForEach with the ForEach-Object way of iterating the objects.

First let’s see how the generic ForEach-Object{} is doing.

(Measure-Command{$Response.Entries | ForEach-Object{$_.DistinguishedName} }).TotalMilliseconds

Resulted in: 1020 milliseconds

And then let’s see how ForEach(){} is doing when the type of the objects in the collection is declared up front.

I define the entry object type like this

[System.DirectoryServices.Protocols.SearchResultEntry]$entry

and then measuring the result

(Measure-Command{ForEach($entry in $Response.Entries){$entry.DistinguishedName} }).TotalMilliseconds

Resulted in: 98 milliseconds

A performance factor of 10!

And since a few of my collections in this project were actually returning more than 200 thousand objects, you can imagine that I was able to see a real effect.
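If you want to try the comparison without an LDAP server at hand, a sketch like the one below can be used. The $entries collection is synthetic data that I generate just for the test, so the absolute numbers will differ from the ones above and will vary between machines and PowerShell versions.

```powershell
# Synthetic stand-in for $Response.Entries: 20,000 simple objects.
$entries = foreach ($i in 1..20000) {
    [pscustomobject]@{ DistinguishedName = "uid=user$i,ou=People,dc=company,dc=com" }
}

# Pipeline version: every object is passed through ForEach-Object.
$pipeline = Measure-Command {
    $entries | ForEach-Object { $_.DistinguishedName }
}

# Statement version: the collection is enumerated directly.
$statement = Measure-Command {
    foreach ($entry in $entries) { $entry.DistinguishedName }
}

"ForEach-Object : {0:N0} ms" -f $pipeline.TotalMilliseconds
"foreach        : {0:N0} ms" -f $statement.TotalMilliseconds
```

Running it a few times gives a better picture than a single measurement.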

FIM 2010 R2 SP1 – 4.1.3114.0 – is Released

Today Microsoft made the FIM 2010 R2 SP1 release available for general download. Read all about the news in SP1 in KB2772429.

I myself ran into a situation at a customer yesterday where the binding redirect for the new version was not applied by the SP1 installer. As described in the KB, this happens if some modifications have been made to the configuration files.

Updating the three files

- miisserver.exe.config

- mmsscrpt.exe.config

- dllhost.exe.config

so that the bindingRedirect section had newVersion="4.1.0.0" solved the problem and made the ECMA 2 adapters in the solution start working again. I actually first made the mistake of using 4.1.3114.0 as newVersion, since this is the Product Version of the new Microsoft.MetadirectoryServicesEx.dll file. But this is not the “Build Number” that we are talking about. The version you need to redirect to is the assembly version, 4.1.0.0, which you can see in the GAC on the Microsoft.MetadirectoryServicesEx.dll.
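The difference between the two version numbers can also be checked from PowerShell instead of browsing the GAC. The sketch below is just one way to do it. The function name Get-AssemblyVersionInfo is made up for this example, and you have to supply the path to your own copy of the DLL.

```powershell
# Hypothetical helper: shows the assembly version next to the file's product version.
function Get-AssemblyVersionInfo {
    param([Parameter(Mandatory=$true)][string]$Path)

    $fullPath = (Resolve-Path -LiteralPath $Path).ProviderPath

    New-Object PSObject -Property @{
        # The version the .NET loader binds to, used in bindingRedirect.
        AssemblyVersion = [System.Reflection.AssemblyName]::GetAssemblyName($fullPath).Version.ToString()
        # The version shown on the file's Details tab in Explorer.
        ProductVersion  = [System.Diagnostics.FileVersionInfo]::GetVersionInfo($fullPath).ProductVersion
    }
}

# Example call; replace <path-to> with the folder that holds the DLL.
# Get-AssemblyVersionInfo -Path '<path-to>\Microsoft.MetadirectoryServicesEx.dll'
```

The AssemblyVersion value is the one that belongs in newVersion.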