When you start using Office 365 at scale, you soon realize that although DirSync solves most of your synchronization needs, it does not solve the problem of assigning the correct license to each user. In this post I will show how I solved this problem using FIM 2010 with a PowerShell MA.

The problem at hand was not only to license unlicensed users, but also to change and remove licenses based on information in FIM.

Background information

The customer has almost 60,000 users in FIM, of which 900 are staff and a little over 57,000 are students.

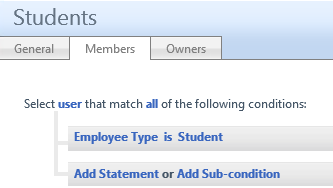

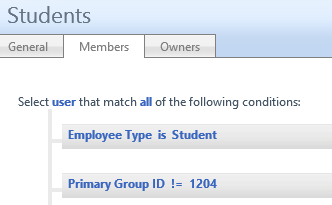

In FIM we use the employeeType attribute to decide the type of user. In this context we have three different values to consider: Staff, Student and Alumni. Each type should have a different license assigned. Since a Student will typically become an Alumni eventually, we need to manage that transition as well.

In FIM we have also created a simple boolean attribute called off365Licensed. This attribute is the main “switch” to decide if you should have a license at all.

Office 365 Licenses

Different subscriptions in Office 365 give you different licenses to work with, so keep in mind that this post only shows one type of subscription. The EDU subscription gives you a couple of “free” licenses, but in order to use them we need to learn how they are composed, since in most cases we will need to disable parts of some licenses.

As an example I will show you the Faculty licenses that are “free”.

You have the STANDARDWOFFPACK_FACULTY and also the PROJECTONLINE_PLAN_1_FACULTY.

But they consist of different parts.

The STANDARDWOFFPACK_FACULTY has four parts in it:

- SHAREPOINTWAC_EDU

- MCOSTANDARD

- SHAREPOINTSTANDARD_EDU

- EXCHANGE_S_STANDARD

The PROJECTONLINE_PLAN_1_FACULTY has three parts in it:

- SHAREPOINT_PROJECT_EDU

- SHAREPOINTWAC_EDU

- SHAREPOINTENTERPRISE_EDU

The problem here is that they overlap and both contain SharePoint licenses that cannot be assigned at the same time. So in our scripts we need to disable parts of the licenses that we cannot assign if we also want to assign the other.

PowerShell MA

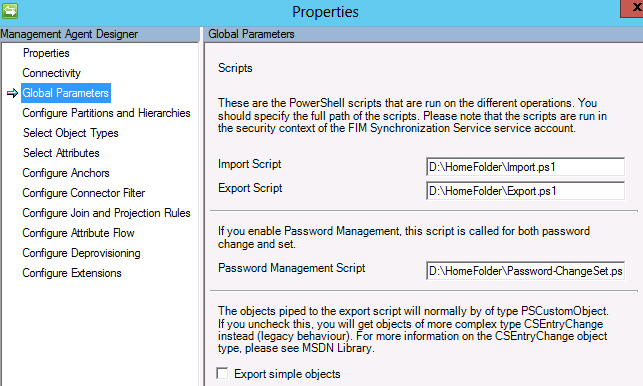

We decided to use the PowerShell MA developed by Sören Granfeldt.

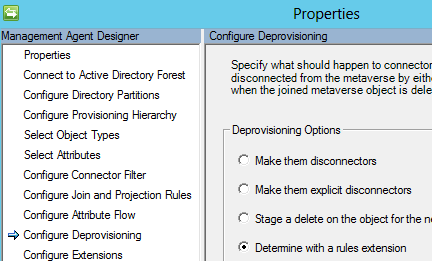

I do not handle provisioning and de-provisioning in this case; I leave that part to DirSync. In this MA we import the users, join them to the corresponding user in the Metaverse, and then make sure the correct license is assigned.

Below I show you some parts of the scripts involved but please download O365MAScripts to get the complete scripts.

Schema

The Schema script is quite simple. I used the UPN as the anchor.

$obj = New-Object -Type PSCustomObject

$obj | Add-Member -Type NoteProperty -Name "Anchor-UPN|String" -Value 1

$obj | Add-Member -Type NoteProperty -Name "objectClass|String" -Value "user"

$obj | Add-Member -Type NoteProperty -Name 'IsLicensed|Boolean' -Value $true

$obj | Add-Member -Type NoteProperty -Name 'LicenseType|String' -Value "Staff"

$obj

Import Script

The import script imports the current MsolUsers

$Users = Get-MsolUser -MaxResults 50000 -DomainName company.com

but also checks the current license assigned

if($User.IsLicensed)

{

if($User.Licenses[0].AccountSkuId.EndsWith("FACULTY"))

{$obj.Add("LicenseType", "Staff")}

elseif($User.Licenses[0].AccountSkuId.EndsWith("STUDENT"))

{$obj.Add("LicenseType", "Student")}

elseif($User.Licenses[0].AccountSkuId.EndsWith("ALUMNI"))

{$obj.Add("LicenseType", "Alumni")}

else {$obj.Add("LicenseType", "Unknown")}

}
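The suffix check can also be factored into a small helper, which makes it easy to try against sample SKU names without connecting to a tenant. This is only a sketch: the function name `Get-LicenseTypeFromSku` and the `contoso:` tenant prefix in the usage line are made up for illustration and are not part of the downloadable scripts.

```powershell
# Hypothetical helper (not from the original scripts): maps the first
# AccountSkuId of a user to the LicenseType value declared in the schema.
function Get-LicenseTypeFromSku {
    param(
        # AccountSkuId values, e.g. "tenant:STANDARDWOFFPACK_FACULTY".
        [string[]]$AccountSkuId
    )

    # Only the first SKU is inspected, mirroring the import script.
    $first = $AccountSkuId | Select-Object -First 1
    if (-not $first) { return 'Unknown' }

    # SKU suffix -> LicenseType value.
    $suffixMap = [ordered]@{
        FACULTY = 'Staff'
        STUDENT = 'Student'
        ALUMNI  = 'Alumni'
    }

    foreach ($suffix in $suffixMap.Keys) {
        if ($first.EndsWith($suffix)) { return $suffixMap[$suffix] }
    }
    return 'Unknown'
}

# Usage with a made-up tenant prefix:
Get-LicenseTypeFromSku 'contoso:STANDARDWOFFPACK_FACULTY'
```

Because the parameter is typed as a string array, the helper accepts both a single SKU and a list of SKUs, so it behaves the same for users with one license and users with several.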

Export

The export script picks up the users from O365

$msoluser=Get-MsolUser -UserPrincipalName $_.DN

$IsLicensed=$msoluser.IsLicensed

I do not bother to look at the current license of the users since changing licenses involves removing the old license and adding the new license. My script just removes the old licenses and assigns the correct ones.

Removing old licenses can be done in a single line

Set-MsolUserLicense -UserPrincipalName $msolUser.UserPrincipalName -RemoveLicenses $msoluser.Licenses.AccountSkuId

Adding the correct license involves defining the licenses including the excludes discussed earlier in this post.
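As a rough illustration of what such a definition can look like, the sketch below builds the license options object for the faculty standard pack with `New-MsolLicenseOptions`. The tenant prefix `contoso` is a placeholder, and the choice of which plans to disable is my assumption based on the SharePoint overlap described earlier; the complete scripts may define this differently.

```powershell
# Sketch only. Assumes the MSOnline module is installed and that
# Connect-MsolService has already been run in this session.
Import-Module MSOnline

# Assumption: "contoso" is a placeholder tenant prefix.
# (Get-MsolAccountSku).AccountSkuId lists the real values for your tenant.
$STANDARDWOFFPACK_FACULTY     = 'contoso:STANDARDWOFFPACK_FACULTY'
$PROJECTONLINE_PLAN_1_FACULTY = 'contoso:PROJECTONLINE_PLAN_1_FACULTY'

# Assumption: the SharePoint-related plans of the standard pack are switched
# off so they do not collide with the ones in the Project Online license.
$FacultyStnd = New-MsolLicenseOptions `
    -AccountSkuId $STANDARDWOFFPACK_FACULTY `
    -DisabledPlans 'SHAREPOINTWAC_EDU', 'SHAREPOINTSTANDARD_EDU'
```

The resulting object is what gets passed to the `-LicenseOptions` parameter of `Set-MsolUserLicense`. The student variant would be built the same way from the corresponding student SKU and plan names.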

Please note that before we can assign any licenses we need to set the UsageLocation on the user.

if(!$msoluser.UsageLocation)

{Set-MsolUser -UserPrincipalName $msolUser.UserPrincipalName -UsageLocation $DefaultUsageLocation;}

We then assign the license depending on the employeeType in FIM.

if($LicenseType -eq "Staff")

{

Set-MsolUserLicense -UserPrincipalName $msolUser.UserPrincipalName -AddLicenses $STANDARDWOFFPACK_FACULTY -LicenseOptions $FacultyStnd;

Set-MsolUserLicense -UserPrincipalName $msolUser.UserPrincipalName -AddLicenses $PROJECTONLINE_PLAN_1_FACULTY;

}

elseif($LicenseType -eq "Student")

{

Set-MsolUserLicense -UserPrincipalName $msolUser.UserPrincipalName -AddLicenses $STANDARDWOFFPACK_STUDENT -LicenseOptions $StudStnd;

Set-MsolUserLicense -UserPrincipalName $msolUser.UserPrincipalName -AddLicenses $PROJECTONLINE_PLAN_1_STUDENT;

}

elseif($LicenseType -eq "Alumni")

{

Set-MsolUserLicense -UserPrincipalName $msolUser.UserPrincipalName -AddLicenses $EXCHANGESTANDARD_ALUMNI;

}

else

{

throw "Unknown LicenseType"

}

Synchronization Rule

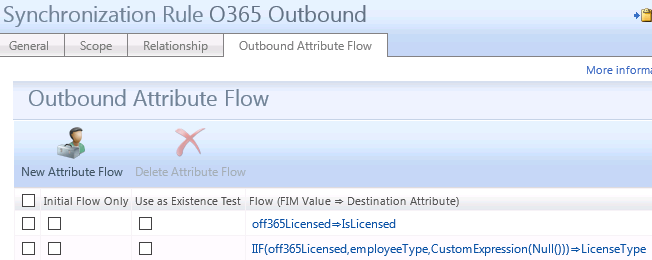

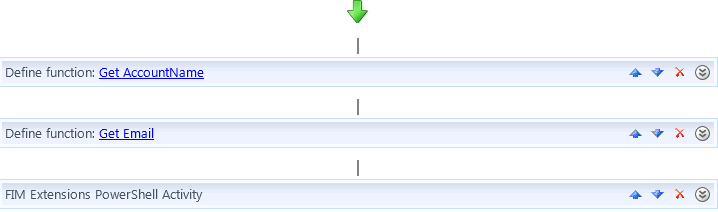

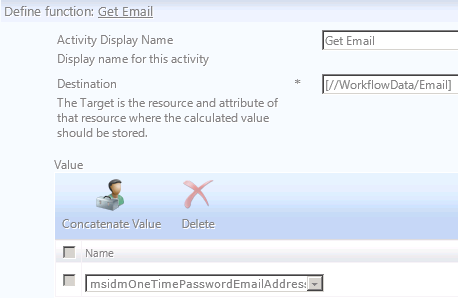

Finally, we need to configure the outbound synchronization rule. We need two flows, plus a condition on the LicenseType flow so that it is only set on licensed users.

Conclusion

In this post I have shown one example of how to manage Office 365 licenses. As you can imagine, this task can grow substantially if we also need to manage individually selectable licenses. We have discussed this with this particular client, using the FIM portal for self-service to add additional licenses. That would of course require some major changes to the current license management.